Relevance Realization, Cerebral Hemispheres, and the Reconciliation of Science and Mythology

Integrating the work of John Vervaeke, Iain McGilchrist, and Jordan Peterson

Iain McGilchrist’s 2009 book The Master and His Emissary is destined to be a classic. Even if he’s wrong about any particular idea in the book, the scope of his thesis and the amount of evidence he brings to bear on it make it interesting and useful. McGilchrist claims that cultures can come to take on the characteristics of the left or right cerebral hemisphere and that modern Western culture is dangerously tilted towards the cognitive style of the left hemisphere.

There are some people, who I affectionately think of as “skeptards”, who display a knee-jerk dismissal of any discussion of hemispheric differences. For whatever reason, some neuroscientists have sought to discredit discussion of hemispheric differences by calling them a “myth” or dismissing them as “pop science”. These neuroscientists are usually only referring to individual differences in hemispheric functioning, but this nuance tends to be lost on their audience. The idea that hemispheric differences are a “myth” has seeped into mainstream intellectual culture so that some people now assume that any discussion of hemispheric differences is unscientific.

As somebody who has spent hundreds of hours reading the scientific literature on hemispheric differences, I can say with confidence that there is overwhelming evidence for important and systematic differences between the cerebral hemispheres. This is as true of other animals as it is of humans (see Rogers, Vallortigara, and Andrew’s 2013 book Divided Brains for a review of the animal literature). How we should understand those differences is a matter of ongoing debate. But nobody who reads this literature with a functioning brain can deny that the differences are real and important. Although I have disagreements with some details of his treatment, Iain McGilchrist’s review of hemispheric differences in chapter 2 of The Master and His Emissary is extremely thorough and supported by a large number of mainstream scientific publications, which he meticulously references in both of his books.

In this post I am going to make a claim that supports McGilchrist’s overall thesis, although I’m not so sure he would agree with it. My take on the functioning of the hemispheres may differ in some ways from his. In 2008 the cognitive neuroscientist Joseph Dien published the Janus model of hemispheric differences. In this post I will draw on the Janus model to argue that different cognitive strategies are more or less useful depending on the predictability of the current environment. The left hemisphere creates highly particular and precise cognitive models that can be used to predict and control the world around us. This strategy is useful if the world is actually predictable, but is not so useful if you find yourself in novel or unpredictable circumstances. Precise models have the problem of not being very generalizable. On the other hand, the strategy of the right hemisphere is to create a less detailed but more generalizable model of the world that is useful in novel and unpredictable situations.

Iain McGilchrist has argued that the fundamental difference between the cerebral hemispheres consists of the type of attention they pay to the world. The left hemisphere pays a narrow, focused, detail-oriented kind of attention to the world while the right hemisphere pays a more broad and open kind of attention. I agree. I think this hypothesis can be made more precise by integrating it with modern cognitive science. Predictive processing is an emerging framework in cognitive science that characterizes attention as a process of “precision-weighting” (a concept I will unpack further down in this post). John Vervaeke is a cognitive scientist and collaborator of mine (well-known for his “Awakening from the Meaning Crisis” series on YouTube) who has published multiple papers in which he characterizes the process by which we allocate our attention as “relevance realization”.

Recently, Mark Miller, John Vervaeke, and I published a paper arguing that precision-weighting is, in fact, the predictive processing account of relevance realization. Despite their different intellectual backgrounds and vocabularies, precision-weighting and relevance realization describe the same process. In support of our thesis, we provided evidence that the tradeoffs inherent to precision-weighting are also inherent to relevance realization. In this post I will argue that these same tradeoffs characterize the cerebral hemispheres. If so, this means that the fundamental difference between the cerebral hemispheres consists of how they “realize relevance”, which manifests as a difference in how they allocate their attention.

Science is involved in creating highly precise, specialized models that can be used to predict and control the world. Multiple scientists have recently proposed that the function of mythology and supernatural beliefs is to provide more generalizable models of the world (e.g., here and here). Could this difference between science and mythology be analogous to the hemispheric differences described by the Janus model? I will draw on Jordan Peterson’s 1999 book Maps of Meaning to explore this possibility too.

Today we find ourselves in a world that is perhaps more novel and unpredictable than at any other moment in human history. I will conclude this post by suggesting that the strategy of the right hemisphere, which looks to the past for guidance in the present, and which promotes resilience in the face of radical novelty and uncertainty, will be necessary for navigating this tumultuous period.

The Janus Model of Hemispheric Differences

Janus was the name of a Roman god with two faces. He could look into the future with one face and into the past with the other.

Cognitive neuroscientist Joseph Dien’s Janus model of hemispheric differences is so named because he believes that the brain has a dual nature similar to that of the Roman god Janus. In Dien’s words, the Janus model “proposes that the left hemisphere is generally specialized to anticipate multiple possible futures while the right hemisphere is generally specialized to integrate ongoing strands of information into a single unitary view of the past that it can then use to respond to events as they occur” (p. 305). The left hemisphere wants to make plans and execute them. The right hemisphere builds a unitary model based on past experience so that it can react to stimuli in the present. This means that the left hemisphere tends to be “proactive” while the right hemisphere tends to be “reactive”. Each of these strategies can be useful, of course, and we need both of them. Their utility will depend on context in ways I will discuss later.

There are some obvious ways that the Janus model can be misunderstood. Dien is not claiming, for example, that the left hemisphere doesn’t learn from the past or that the right hemisphere never engages in prediction. Both hemispheres are learning from and predicting the world. They just do it in different ways:

… the LH proactive focus does not preclude an ability to learn from experiences to improve its predictions while the RH reactive focus does not denote a focus on memory per se but rather on using the past to detect novel events and responding to them. In some sense, both hemispheric representations involve a type of expectation but those of the LH take the form of discrete predictions whereas those of the RH take the form of a baseline summary of past experiences against which deviations, anomalies, and novelties can be detected… (Dien, 2008 p. 306)

I’m not going to review evidence for the Janus model in detail here. The paper is free online if you want those kinds of details (see here). For our purposes, what’s important to understand about the Janus model is that it is an adaptationist hypothesis about the hemispheres. This means that Dien is trying to explain the function of the hemispheres, but his model is agnostic about the actual mechanism or mechanisms that implement this function. Further down I will suggest that tradeoffs inherent to relevance realization can provide a kind of mechanistic explanation.

The other most prominent adaptationist hypothesis of hemispheric functioning is the novelty-routinization hypothesis (see Elkhonon Goldberg’s 2016 book The New Executive Brain for a recent treatment). This theory was first put forward by Goldberg and Costa in 1981, and argues that the left hemisphere is specialized for routine situations while the right hemisphere is specialized for novel situations. In 2009 a trio of animal researchers (MacNeilage, Rogers, & Vallortigara) published an article in Scientific American putting forward a very similar theory drawing on animal research rather than human research. I haven’t seen any of these three cite Goldberg in their work so they may have converged on their theory independently.

According to MacNeilage and colleagues, the fundamental difference between the hemispheres in all vertebrates is that the left hemisphere “must determine whether the stimulus fits some familiar category, so as to make whatever well-established response, if any, is called for” while the right hemisphere “must estimate the overall novelty of the stimulus and take decisive emergency action if needed” (MacNeilage et al., 2009 p. 66). In other words, the left hemisphere applies well-established responses to routine situations while the right hemisphere estimates and reacts to novelty.

The Janus model does not contradict the novelty-routinization theory, but incorporates it. Being predictive and proactive (i.e., future-facing) is more useful when dealing with predictable, routine situations. Thus, the left hemisphere is expected to deal more with routine situations according to the Janus model. Being reactive, on the other hand, is more useful in novel and unpredictable situations. You cannot plan ahead if you have no idea what’s going to happen next. In radically novel situations you therefore need a more generalizable model of the world (which is created through incorporating general principles from past experiences) that can be applied to almost anything. That is the job of the right hemisphere according to the Janus model.

Relevance Realization and the Hemispheres

A common observation among those who study hemispheric differences is that the left hemisphere pays attention to local detail while the right hemisphere pays attention to global patterns. In The Master and His Emissary, Iain McGilchrist stated that:

There is a need to focus attention narrowly and with precision, as a bird, for example, needs to focus on a grain of corn that it must eat, in order to pick it out from, say, the pieces of grit on which it lies. At the same time there is a need for open attention, as wide as possible, to guard against a possible predator. That requires some doing. It’s like a particularly bad case of trying to rub your tummy and pat your head at the same time – only worse, because it’s an impossibility. Not only are these two different exercises that need to be carried on simultaneously, they are two quite different kinds of exercise, requiring not just that attention should be divided, but that it should be of two distinct types at once. (p. 25)

In their 2009 paper, MacNeilage and colleagues suggested that this problem, which is analogous to trying to “rub your tummy and pat your head at the same time”, drove the evolution of hemispheric differences in vertebrates. We need to simultaneously pay two very different kinds of attention to the world and for that we need two functionally separate neural networks.

The left hemisphere focuses narrowly and with precision while the right hemisphere pays a more broad and open kind of attention to the world. This hypothesis can be made more precise by drawing on modern cognitive science accounts of attention. Predictive processing is an emerging framework within cognitive science that characterizes the mind as proactively predicting the world and attempting to reduce long-term errors in its predictions. Predictive processing is a variant of the Bayesian brain hypothesis, which suggests that the brain is doing something like hierarchical Bayesian inference. I’m not going to try to unpack Bayesian inference here. I’m just going to try to explain the predictive processing account of attention as intuitively as I can.

The predictive processing account of attention

Scientists used to think that perception was a process by which you take in a bunch of sensory input and then process all of that input to create a rich, detailed mental model of the world. That idea is wrong. In fact, at any given moment you are taking in much less sensory input than your visual experience would indicate. Instead, your brain is always predicting what your sensory input is going to be and that prediction (rather than sensory input) is most of what you perceive at any given moment. All that your brain actually processes, in this view, is the difference between your internally generated prediction and the sensory input. In other words, your brain only processes prediction errors. This is far more efficient than trying to process everything, which would be technically impossible. You can now effectively ignore anything that doesn’t produce prediction error.

This means that perception is always a combination of top-down expectation and bottom-up sensory input. But this understanding of perception creates a dilemma. Sometimes your predictions will be wrong and sometimes the bottom-up input will be unreliable. How much should you rely on your predictions and how much should you rely on bottom-up input?

Consider the following example (taken from Andy Clark’s book Surfing Uncertainty). Suppose you are driving at night on a foggy stretch of highway that you have driven many times in the past. Visibility is poor, but the road is so familiar that you can almost drive it by habit. How much “weight” should you give to bottom-up sensory input and how much to your own internal prediction? In this situation you might want to give more weight to your own prediction than you normally would because your prediction is based on many experiences (and is likely to be reliable) while the sensory input is unreliable because it’s dark and foggy.

In that situation the tradeoff is relatively obvious. But in many situations it is far from obvious how much weight should be given to top-down predictions and how much weight should be given to bottom-up sensory input. It will depend in part on how “precise” your predictions are and how “precise” you think the sensory input is. You should give more “weight” (i.e., influence) to more precise (and therefore more reliable) sensory input. This is why it’s called “precision-weighting”. Precision-weighting determines the relative influence of bottom-up sensory input and top-down predictions (although that’s not all it does). Precision-weighting is the predictive processing account of attention. When we pay attention to something, it means we are treating that input as if it’s highly precise.
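The foggy-highway example can be sketched numerically. The snippet below is a minimal illustration rather than the full hierarchical machinery of predictive processing: it assumes a single Gaussian prediction and a single Gaussian sensory reading, treats precision as the inverse of variance, and uses a function name and numbers that are made up for the example.

```python
def precision_weighted_estimate(prediction, prediction_var, sensed, sensed_var):
    """Combine a top-down prediction with a bottom-up reading.

    Assumption: both are Gaussian, and precision = 1 / variance.
    Returns the updated estimate and the weight given to the sensory input.
    """
    prediction_precision = 1.0 / prediction_var
    sensory_precision = 1.0 / sensed_var
    # Share of total precision that belongs to the sensory input.
    weight_on_senses = sensory_precision / (prediction_precision + sensory_precision)
    # The prediction error is scaled by how much the senses are trusted.
    prediction_error = sensed - prediction
    estimate = prediction + weight_on_senses * prediction_error
    return estimate, weight_on_senses


# Hypothetical numbers: habit says the bend in the road is at position 10,
# while the eyes report position 14.
foggy_estimate, foggy_weight = precision_weighted_estimate(
    prediction=10.0, prediction_var=1.0, sensed=14.0, sensed_var=9.0
)
clear_estimate, clear_weight = precision_weighted_estimate(
    prediction=10.0, prediction_var=1.0, sensed=14.0, sensed_var=0.25
)

print(f"fog:   weight on senses {foggy_weight:.2f}, estimate {foggy_estimate:.1f}")
print(f"clear: weight on senses {clear_weight:.2f}, estimate {clear_estimate:.1f}")
```

With these made-up numbers the foggy estimate stays close to the prediction (10.4), while the clear-day estimate moves most of the way towards the sensory reading (13.2). The same prediction error gets a different influence depending on how precise the input is taken to be.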

Precision-weighting and relevance realization

Last year I published a paper with my collaborators Mark Miller and John Vervaeke in which we argued that precision-weighting is, in fact, the predictive processing account of relevance realization. This is a little bit surprising since it’s not immediately obvious that these concepts refer to the same process. Predictive processing and relevance realization have different intellectual backgrounds and use different vocabularies.

In developing the theory of relevance realization, Vervaeke and colleagues recognized that many problems in cognitive science were actually different manifestations of a single problem, which can be stated as such: How is it that cognitive agents intelligently ignore the vast majority of the world that is irrelevant to them and zero in on those aspects of the world that are relevant?

Vervaeke and colleagues argued that this problem cannot be solved by having an explicit list or set of rules. The category of “relevance” is so context-dependent that there is no way to have a list or set of rules that will always cover it. This is similar to how Darwinian evolution does not describe a set of properties or rules that constitute “fitness”. Instead, Darwinian evolution describes a process by which fitness is determined through variation and selection. Relevance realization must also describe a process.

Vervaeke and colleagues argue that this process is characterized by a set of opponent-processing relationships or tradeoffs. These tradeoffs include:

Specialization vs. Generalization

Focusing vs. Diversifying

Exploitation vs. Exploration

What we showed in our recent paper is that all of the tradeoffs that characterize relevance realization also characterize precision-weighting. Precision-weighting, we suggested, is therefore the predictive processing account of relevance realization. I think all of these tradeoffs also characterize the differences between the hemispheres. I will briefly review my reasons for thinking this.

1. Specialization vs. Generalization

Vervaeke and colleagues argued that there is a cognitive tradeoff between producing special purpose machinery and general purpose machinery. They demonstrated this tradeoff with the example of an algorithm used to train neural networks. The algorithm can be set up so that the network will be precisely fitted to the particular data that it is trained on (meaning that it will be highly specialized for a particular data set) or so that the network’s performance will be able to generalize to new datasets. Below I will suggest that this tradeoff also characterizes the cerebral hemispheres.

Elkhonon Goldberg is a pioneer in the study of hemispheric differences and has been publishing on the topic for more than 40 years. In his 2005 book The Wisdom Paradox he uses an analogy from statistics to describe how the hemispheres differ from each other:

In descriptive statistics, the same set of data can be represented in two different ways: as group data and as a cloud of individual data points. The first representation is a grand average that captures the essence of the totality of all previous experiences, but in which the details, the specifics, are lost. The second representation is a library of specific experiences, but without the ability to extract the essential generalities.

Group data are represented by means and standard deviations. By contrast, individual data points are represented by scatter-plot diagrams. When new information arrives, the two respective representations will be updated in two very different ways. The group data will have to be recalculated every time such new information is received, resulting in a new mean and a new standard deviation. By contrast, the scatter-plot diagram will be updated by merely adding individual new data points.

Think of the right hemisphere as representing the organism’s cumulative knowledge through some cortical means and standard deviations of sorts, as the “grand means” of all prior experiences, but with the loss of details. Think of the left hemisphere as a cortical scatter-plot diagram of sorts, as a library of relatively specific representations, each corresponding to a relatively narrow class of similar situations. (ch. 11)

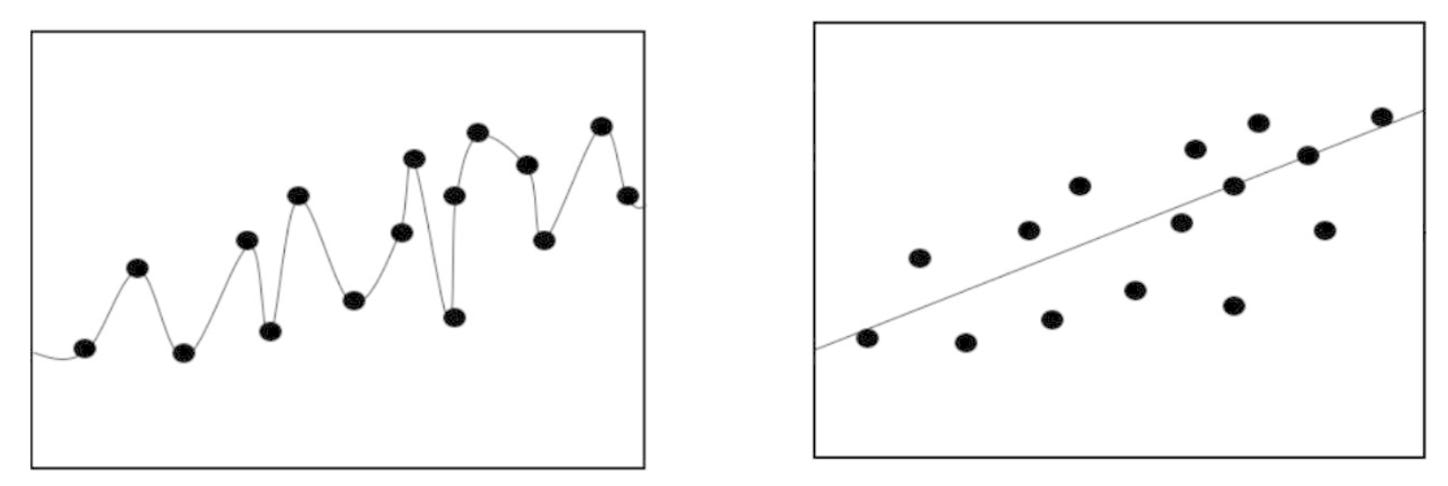

Goldberg uses the figure below as a visual demonstration of what he means (with A corresponding to the left hemisphere, B to the right hemisphere).

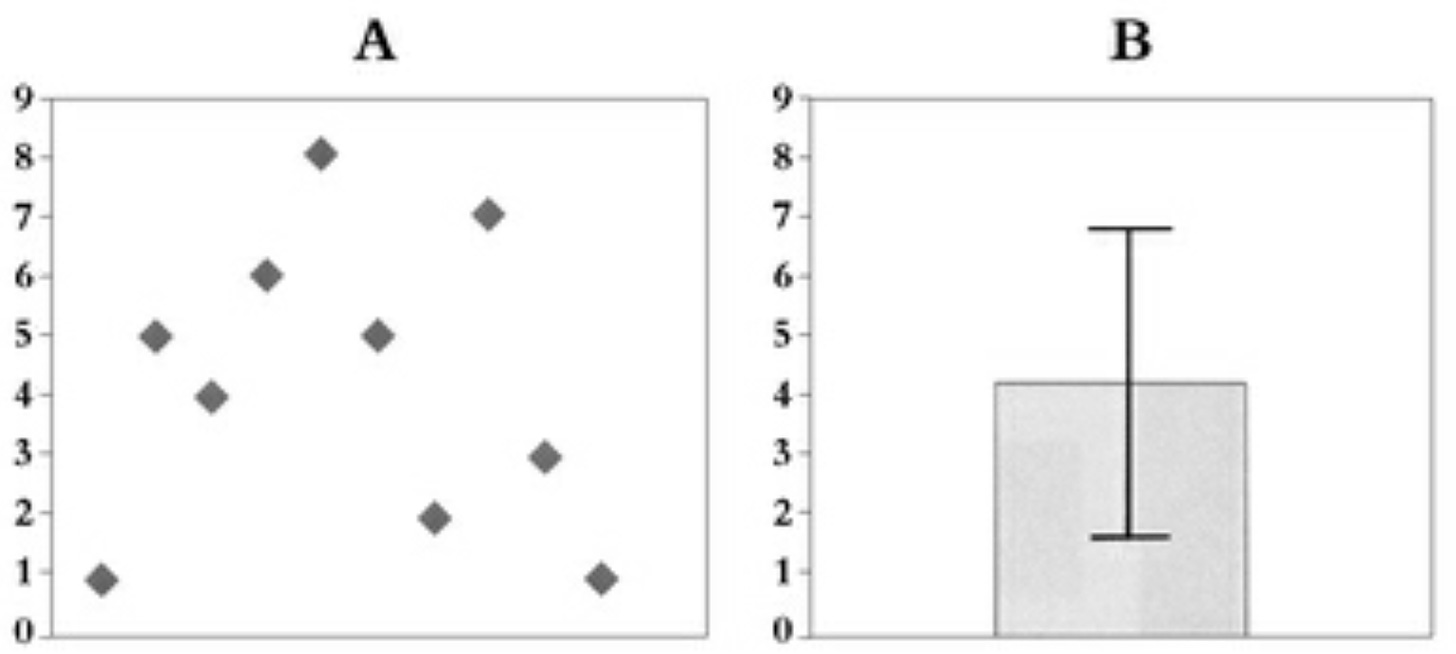
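Goldberg’s statistical analogy can also be sketched in a few lines of Python. This is only an illustration of the analogy, not a claim about how cortex stores anything. The class names are hypothetical, and the running update uses Welford’s method, which is my choice for the sketch rather than anything Goldberg specifies.

```python
import math


class GrandAverage:
    """'Group data': keeps only a running mean and standard deviation."""

    def __init__(self):
        self.n = 0
        self.mean = 0.0
        self._m2 = 0.0  # running sum of squared deviations from the mean

    def update(self, x):
        # Every new experience changes the summary (Welford's online update).
        self.n += 1
        delta = x - self.mean
        self.mean += delta / self.n
        self._m2 += delta * (x - self.mean)

    @property
    def std(self):
        # Population standard deviation of everything seen so far.
        return math.sqrt(self._m2 / self.n) if self.n else 0.0


class ExperienceLibrary:
    """'Scatter plot': keeps every individual data point, with no summary."""

    def __init__(self):
        self.points = []

    def update(self, x):
        # A new experience is simply added to the collection.
        self.points.append(x)


experiences = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]  # made-up data
summary = GrandAverage()
library = ExperienceLibrary()
for x in experiences:
    summary.update(x)
    library.update(x)

print(f"grand average: mean={summary.mean:.1f}, std={summary.std:.1f}")
print(f"library: {len(library.points)} individual points kept")
```

The summary ends up holding two numbers (a mean of 5.0 and a standard deviation of 2.0) from which the individual experiences cannot be recovered, while the library holds every point but no generalization about them.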

Goldberg suggests that the left hemisphere stores information in the form of detailed data points while the right hemisphere stores information in the form of larger patterns like averages and standard deviations. This is an analogy, of course, but Goldberg thinks that it adequately captures a fundamental difference between the hemispheres. Below is one of the figures we used in our recent paper to describe the tradeoffs associated with precision-weighting. Compare Goldberg’s analogy with our representation of precision-weighting.

The lines in these images represent a “cognitive model” and the dots represent sensory input. The image on the left is what happens when you give a high weight to sensory input. Giving a high weight to sensory input means that you’re going to treat each data point as if it’s very important. Your model must therefore precisely account for each data point (like the left image). The image on the right is what happens when you give a low weight to sensory input. Giving a low weight to sensory input means that each individual data point isn’t as important. Some data points will be effectively ignored so that you can find the “line of best fit” (like the right image).

The model in the left image adequately captures the details but loses out on the “big picture”. The model in the right image captures the big picture but loses out on the details. As Goldberg put it, the left hemisphere is like “a library of specific experiences, but without the ability to extract the essential generalities” while the right hemisphere is more like “a grand average that captures the essence of the totality of all previous experiences, but in which the details, the specifics, are lost.” I think that the model we have used to describe tradeoffs in precision-weighting elegantly captures this difference. The left image therefore represents the cognitive-perceptual style of the left hemisphere while the right image represents the cognitive-perceptual style of the right hemisphere.

My contention, therefore, is that the left hemisphere gives a relatively high weight to sensory input while the right hemisphere gives a relatively low weight to sensory input. Since precision-weighting is the predictive processing account of relevance realization, this means that the fundamental difference between the hemispheres is in how they realize relevance.

The left hemisphere is supposed to create lots of specialized, highly detailed models to deal with familiar circumstances as efficiently as possible. Paying attention to detail would be important for that. The right hemisphere is supposed to create a unitary, more generalizable model that is capable of dealing with many different circumstances, which will be useful when dealing with novel situations. When constructing a generalizable model it’s important to ignore some details to pick up on larger patterns.

To put it simply, specialists pay attention to detail and generalists pay attention to the big picture. The left hemisphere is a specialist and the right hemisphere is a generalist.

2. Focusing vs. Diversifying

There is a fundamental tradeoff between focusing one’s efforts on one or a few goals and diversifying one’s efforts across many goals. Vervaeke and colleagues suggested that this is a fundamental tradeoff that characterizes relevance realization. In relation to this, consider Iain McGilchrist’s characterization of problem solving in the hemispheres:

[With problem solving] the right hemisphere presents an array of possible solutions, which remain live while alternatives are explored. The left hemisphere, by contrast, takes the single solution that seems best to fit what it already knows and latches onto it. V. S. Ramachandran’s studies of anosognosia reveal a tendency for the left hemisphere to deny discrepancies that do not fit its already generated schema of things. The right hemisphere, by contrast, is actively watching for discrepancies, more like a devil’s advocate. These approaches are both needed, but pull in opposite directions. (2009, p. 41)

This is plausibly interpreted as the left hemisphere “focusing” on a single solution while the right hemisphere “diversifies” by presenting an array of possible solutions. Each of these strategies is necessary. If we never focused in on a single solution we would be paralyzed by uncertainty. At some point we just have to make a decision and go with it. On the other hand, if we never considered multiple solutions we would be unable to change course when necessary. This characterization of hemispheric differences in problem solving suggests that the focusing-diversifying tradeoff also applies to the cerebral hemispheres.

3. Exploitation vs. Exploration

All organisms are faced with the problem of whether to take advantage of current opportunities or search for better ones. Should I continue to eat from the current patch of berries or leave to find a better one? Should I marry my current partner or keep dating? Should I buy the car I just test drove or keep searching? These exploration-exploitation tradeoffs are ubiquitous in our own lives and in the lives of all organisms. Vervaeke and colleagues suggest that this tradeoff is one aspect of relevance realization.

The left hemisphere tends to focus on what’s right in front of it while the right hemisphere is on the lookout for threats and opportunities on the periphery. This difference makes it relatively obvious that the left hemisphere “exploits” what’s in front of it while the right hemisphere “explores” for other opportunities. Nevertheless, the language of exploit and explore is not used very much in the scientific literature on hemispheric differences.

One exception is a 2012 paper by James Danckert and colleagues, which used a repeated rock-paper-scissors game to test how much patients with damage to the left or right hemisphere would explore different strategies. They found that the left-brain-damaged group, who presumably relied more on their right hemisphere, explored more of the game’s strategy space than the right-brain-damaged group. This suggests that the right hemisphere is more involved in exploring new strategies, and it provides some direct evidence for an increased exploratory tendency associated with the right hemisphere.

Back to the Janus model

Thus, the tradeoffs that characterize relevance realization plausibly apply to the functioning of the hemispheres. Differences in precision-weighting can elegantly explain these tradeoffs (see here). I think this idea can provide a proximate, mechanistic explanation of the Janus model.

Why would being precise correlate with looking towards the future more? And why would being a less precise generalist make one look to the past? Having precise plans and predictions isn’t useful unless the future is at least somewhat predictable. In the domains and situations where the future is highly predictable, it is therefore useful to be more precise.

When the future is unpredictable, it’s obviously not as useful to make precise plans and predictions. On the other hand, having a more fuzzy but generalizable model of the world allows one to have at least some idea of what to do in any circumstance. Extracting generalizable principles and lessons from past experiences requires ignoring details to pick up on larger patterns.

Science & Mythology

On the first page of his 1999 book Maps of Meaning, Jordan Peterson lays out the problem that he will spend the rest of the book trying to solve.

The world can be validly construed as forum for action, or as place of things. The former manner of interpretation — more primordial, and less clearly understood — finds its expression in the arts or humanities, in ritual, drama, literature and mythology. The world as forum for action is a place of value, a place where all things have meaning. This meaning, which is shaped as a consequence of social interaction, is implication for action, or — at a higher level of analysis — implication for the configuration of the interpretive schema that produces or guides action.

The latter manner of interpretation — the world as place of things — finds its formal expression in the methods and theories of science. Science allows for increasingly precise determination of the consensually validatable properties of things, and for efficient utilization of precisely determined things as tools (once the direction such use is to take has been determined, through application of more fundamental narrative processes).

No complete world-picture can be generated without use of both modes of construal. The fact that one mode is generally set at odds with the other means only that the nature of their respective domains remains insufficiently discriminated. (MoM, p. 1)

In Western culture there has been an ongoing conflict between science, which seeks to predict and control, and tradition, which looks to the past for guidance in the present. Could this conflict be analogous to the conflict between the left and right hemisphere?

In comparison to traditional belief systems, science is involved in creating precise, predictive models which can be used to exert tremendous control over the world around us. Scientific research creates life-saving medicines and got us to the moon. It also creates weapons of mass destruction and could soon give birth to a superintelligence, the consequences of which are impossible to know in advance.

Science is also extremely modular. Because of how precise scientific research needs to be, scientists tend to become highly specialized and compartmentalized. Scientific generalists are rare and aren’t necessarily that successful. Some scientists spend their entire career studying extremely specific domains like action potentials in neurons or the Krebs cycle. The narrowness of scientific research contributes to its power. Science often gives us highly precise, highly specialized predictive models that help us to exert more control over the world.

The world of religion and mythology is not so concerned with providing precise, predictive models of the world. Mythological narratives clearly cannot cure diseases or get us to the moon. They cannot produce falsifiable hypotheses to test in a lab. Recently, however, some scientists have argued that mythologies and supernatural beliefs do have a kind of epistemic utility, although it is quite different from the kind that science has.

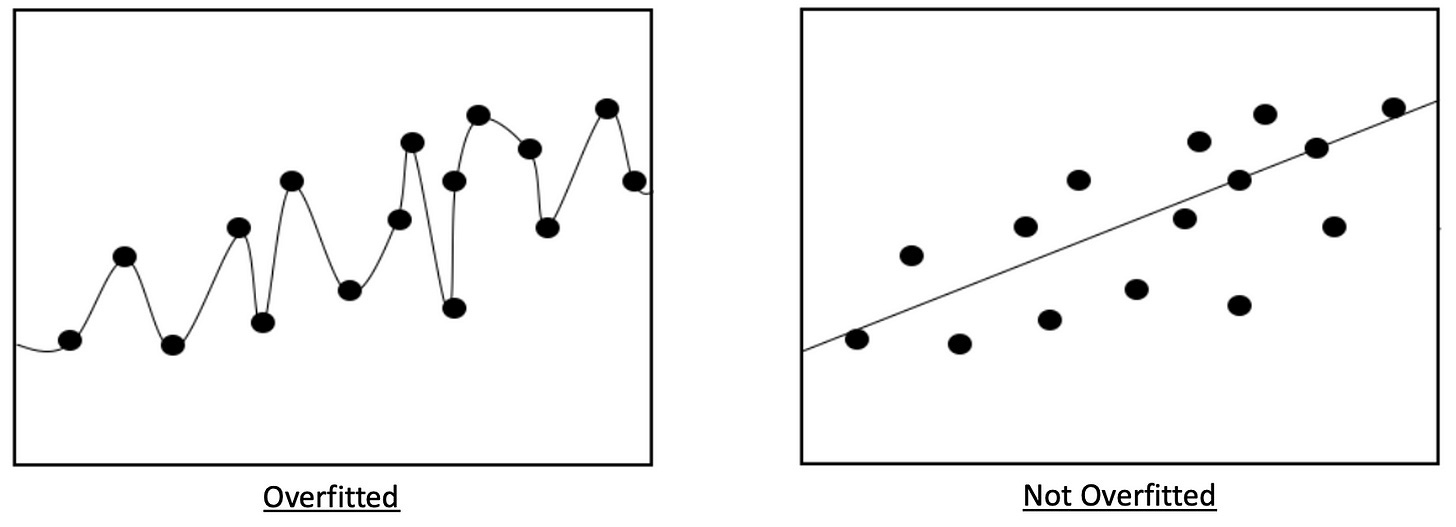

In 2021 neuroscientist Erik Hoel published a paper entitled “The overfitted brain: Dreams evolved to assist generalization”. Although this paper is about dreams, Hoel believes it has implications for our understanding of fiction and mythology. In this paper Hoel suggests that dreams evolved to help organisms avoid overfitting their cognitive models. What does that mean? Consider the figure I used earlier in this post to explain tradeoffs in precision-weighting.

The left image shows a model that is technically overfitted to the data. By trying to fit the model to every little detail, the model ends up missing out on the big picture. The image on the right is properly fitted to the data. It doesn’t attempt to precisely account for every deviation in the data. Rather, it ignores some details to find the line of best fit.
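For readers who want to see overfitting in numbers, here is a minimal sketch. It is a toy setup of my own, not taken from Hoel’s paper: the data follow a simple trend (y = 2x) with a deterministic zigzag standing in for noise, a “connect-the-dots” model passes through every training point, and a least-squares straight line ignores the zigzag. All function names are hypothetical.

```python
def fit_line(xs, ys):
    """Least-squares straight line: ignores detail to capture the overall trend."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    return lambda x: slope * x + intercept


def connect_the_dots(xs, ys):
    """Piecewise-linear model that passes exactly through every training point."""
    def predict(x):
        for i in range(len(xs) - 1):
            x0, x1 = xs[i], xs[i + 1]
            if x0 <= x <= x1:
                y0, y1 = ys[i], ys[i + 1]
                return y0 + (y1 - y0) * (x - x0) / (x1 - x0)
        raise ValueError("x is outside the training range")
    return predict


def mean_squared_error(model, xs, ys):
    return sum((model(x) - y) ** 2 for x, y in zip(xs, ys)) / len(xs)


# Training data: trend y = 2x plus an alternating +1 / -1 disturbance (assumed).
train_x = list(range(10))
train_y = [2 * x + (1 if x % 2 == 0 else -1) for x in train_x]

# Held-out data: new points that follow the underlying trend.
test_x = [x + 0.25 for x in range(9)]
test_y = [2 * x for x in test_x]

line = fit_line(train_x, train_y)
dots = connect_the_dots(train_x, train_y)

line_train = mean_squared_error(line, train_x, train_y)
dots_train = mean_squared_error(dots, train_x, train_y)
line_test = mean_squared_error(line, test_x, test_y)
dots_test = mean_squared_error(dots, test_x, test_y)

print(f"training error: line={line_train:.3f}, connect-the-dots={dots_train:.3f}")
print(f"held-out error: line={line_test:.3f}, connect-the-dots={dots_test:.3f}")
```

In this toy case the connect-the-dots model has zero error on the points it was fitted to but a larger error on the held-out points than the straight line does. That is the signature of overfitting: accounting for every detail at the cost of generalizing to new cases.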

Hoel reviews three phenomenological properties of dreams which, he suggests, provide evidence that they help us to avoid overfitting.

1. “First, the sparseness of dreams in that they are generally less vivid than waking life in that they contain less sensory and conceptual information (i.e., less detail). This lack of detail in dreams is universal, and examples include the blurring of text causing an impossibility of reading, using phones, or calculations.”

2. “Second, the hallucinatory quality of dreams in that they are generally unusual in some way (i.e., not the simple repetition of daily events or specific memories). This includes the fact that in dreams, events and concepts often exist outside of normally strict categories (a person becomes another person, a house a spaceship, and so on).”

3. “Third, the narrative property of dreams, in that dreams in adult humans are generally sequences of events ordered such that they form a narrative, albeit a fabulist one.” (p. 3)

The lack of detail in dreams helps to prevent overfitting because finding general patterns requires ignoring the details of a situation. The unusual quality of dreams helps with generalization because we need our cognitive models to be capable of generalizing to situations that are outside of our normal, everyday experiences. The narrative structure of dreams helps with generalization because a narrative helps us to take a set of facts and order them in such a way that we can extract a big picture lesson from them. Most narratives have some kind of “moral”, which means that they are meant to convey some generalizable lesson about how to act in the world. The narrative structure of dreams indicates that they, too, may be conveying some generalizable implications for how to act.

All of these phenomenological properties also characterize mythological narratives. Mythologies are typically sparse in detail, very unusual compared to normal life (e.g., the world resting on the back of a turtle, gods with two faces, virgin births, etc.), and obviously have a narrative structure. This overlap with the phenomenology of dreams suggests that mythological narratives may perform a function similar to that of dreams.

In relation to his theory of dreams, consider what Hoel said about the “hero myth”:

It starts with some call to adventure or change, requires the protagonist to pass a guardian, perhaps meeting a helper or mentor before facing their challenges, requires a descent into the abyss, and finishes with transformation and atonement. This is so broad that it can describe Star Wars, Harry Potter, or even, with a bit of interpretation, Pride and Prejudice. Our desires and goals, our moral landscape, take just as much from the fictional as from the real. And that can be a good thing. There is a sense in which something like the hero myth is actually more true than reality, since it offers a generalizability impossible for any true narrative to possess. (Hoel, 2019)

Hoel suggests that these mythological narratives serve the same basic function as dreams. They help us to avoid overfitting by providing a more generalizable model of the world. In that sense, these mythologies may also serve the same purpose as the right hemisphere according to the Janus model. They provide a unitary, generalizable model of the world that can be used to respond appropriately in novel situations. This is concordant with Jordan Peterson’s argument about the function of myth in Maps of Meaning. Peterson suggested that the hero myth describes “what to do when you no longer know what to do”. In my previous post about Erik Hoel’s theory I integrated it with Jordan Peterson’s hypothesis about the emergence of the hero myth over the course of human history. I’ll briefly review that idea here.

Peterson argued that human beings have always closely observed people who behave admirably. We imitate these people and tell stories about them. But what happens when you accumulate hundreds of stories about admirable figures? It seems natural that human beings, who so readily engage in abstraction, would attempt to extract general patterns and principles from these more particular stories. We do this by attempting to tell a story that will incorporate all of the wisdom inherent in the more particular stories. Peterson describes this process as follows:

All specific adaptive behaviors (which are acts that restrict the destructive or enhance the beneficial potential of the unknown) follow a general pattern. This "pattern" — which at least produces the results intended (and therefore desired) — inevitably attracts social interest. "Interesting" or "admirable" behaviors engender imitation and description. Such imitation and description might first be of an interesting or admirable behavior, but is later of the class of interesting and admirable behaviors. The class is then imitated as a general guide to specific actions; is redescribed, redistilled and imitated once again. The image of the hero, step by step, becomes ever clearer, and ever more broadly applicable. (MoM, p. 180)

This “image of the hero” therefore provides a “general guide to specific action”. Whether in the form of ritual, art, or myth this will necessarily be a fiction. But as Hoel said earlier, there is a sense in which this kind of fiction can be more true than reality if it offers a more generalizable understanding of how to act in the world.

We have observed many particular admirable people and have attempted to extract the “line of best fit”, or the general pattern that underlies their behavior. That general pattern is the “monomyth” or “metamythology” described by Joseph Campbell and Jordan Peterson. The image below is meant to represent this process.

Independently of Hoel, two evolutionary anthropologists recently published a paper in Human Nature in which they make a similar argument about the function of supernatural beliefs. Aaron Lightner and Ed Hagen suggest that, although some supernatural beliefs appear to play purely social functions (and do not necessarily have any epistemic utility), others appear to play a different role. In the latter case, they suggest that supernatural belief:

… reflects the workings of specialized cognitive adaptations that are well-suited for generating explanations and predictions about complex and noisy phenomena across a variety of ontological domains… Our capacity for an intuitive psychology, which generates anthropomorphic explanations, is especially well-suited for modeling unobservable, uncertain, and complex processes in terms of high-level concepts, such as intentions. This can improve a bias-variance tradeoff by underspecifying a novel observation’s causal processes, especially when the underlying system is complex and/or not well-understood. (p. 21)

In this context, improving a bias-variance tradeoff amounts to avoiding overfitting: a deliberately underspecified explanation accepts some bias in exchange for varying less from one noisy observation to the next. Lightner and Hagen are claiming that supernatural beliefs provide explanations of the world that are less prone to overfitting than precise mechanistic explanations. Although their theory is probably different from Hoel’s in detail, the core function of supernatural beliefs according to these anthropologists is the same as the core function of myth and other fictions according to Erik Hoel. In both cases, they serve to provide models of the world that avoid overfitting when dealing with complex, noisy phenomena.

Fast and Slow

The process by which scientific beliefs are updated is very different from the process by which mythological narratives are updated. Scientific beliefs can change rapidly based on immediate sensory data. For example, the advent of technology to efficiently analyze ancient DNA has led to a massive shift in the way that scientists think about recent human evolution. Whereas before, ancient patterns of migration had to be pieced together through sparse archaeological data, we now have direct genetic evidence about patterns of migration in the ancient world (see Razib Khan’s Substack for ongoing commentary about this research). Opinions among scientists studying these issues have changed rapidly on the basis of incoming evidence. In the realm of science, one experiment or study can sometimes overturn a theory that has been taken for granted for decades.

The process by which mythology changes is very different. Mythologies change on the scale of millennia or centuries rather than months or weeks. Mythology is typically immune to being undermined by any particular sensory data, although it must of course be responsive to the world in some sense. Looking at all of the world’s most important mythologies, we can see that they share a general pattern (i.e., the hero myth), which is a testament to the resilience of that pattern.

Jordan Peterson argued that the hero myth describes “what to do when you no longer know what to do”, i.e., what to do in the face of radical novelty and uncertainty. By contrast, knowing everything there is to know about action potentials in the brain (or just about any other domain of scientific expertise) has very little practical benefit outside of a narrow range of circumstances.

Compare this difference between science and mythology to the way that Elkhonon Goldberg describes updating in the hemispheres:

… the right hemisphere, the novelty hemisphere, is modified by experience at a much slower rate than the left hemisphere, the hemisphere of established cognitive routines. The conclusion may seem counterintuitive at first glance, since the right hemisphere is the novelty hemisphere and the left hemisphere is the hemisphere of established cognitive routines. But at a deeper level of analysis, the slow-changing right hemisphere is better suited for dealing with novelty precisely because it contains an averaged default representation capturing certain shared, and thus poorly differentiated, features of many prior experiences. When the specific knowledge about the situation at hand is lacking, you fare best by doing whatever worked in the largest number of prior experiences. But once you have figured out the properties of the specific situation at hand, you tailor your response accordingly and store the newly gained experience. (2016, p. 71).

In this way, science and mythology seem to line up nicely with hemispheric differences. Science updates quickly and scientific knowledge can be extremely useful in narrow domains but does not necessarily generalize very well. Mythological narratives update extremely slowly and represent the pattern of behavior necessary for dealing appropriately with novel and unpredictable circumstances. If Jordan Peterson’s theory about the cultural evolution of mythology is correct, then describing the hero myth in the way that Goldberg described the right hemisphere, as “an averaged default representation capturing certain shared, and thus poorly differentiated, features of many prior experiences” seems pretty accurate.
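The fast and slow updating that Goldberg describes can be loosely illustrated with two running estimates that differ only in their learning rate. This is a toy analogy, not a model of the hemispheres or of cultural evolution. The stream of "experiences", the two learning rates, and all of the names below are hypothetical choices made for the sketch.

```python
FAST_RATE = 0.9   # assumed: updates almost entirely toward the latest experience
SLOW_RATE = 0.05  # assumed: nudges an averaged default a little at a time


def update(estimate, observation, learning_rate):
    """Move the estimate a fraction of the way toward the new observation."""
    return estimate + learning_rate * (observation - estimate)


# A made-up stream of experiences that fluctuates around a long-run average of 10.
experiences = [8.0, 12.0, 10.0, 6.0, 14.0] * 40
long_run_average = sum(experiences) / len(experiences)

# Both learners start from the first experience and see the same stream.
fast = slow = experiences[0]
for observation in experiences[1:]:
    fast = update(fast, observation, FAST_RATE)
    slow = update(slow, observation, SLOW_RATE)

latest = experiences[-1]
print(f"latest experience: {latest:.2f}")
print(f"long-run average:  {long_run_average:.2f}")
print(f"fast learner:      {fast:.2f}")
print(f"slow learner:      {slow:.2f}")
```

The fast learner ends up tailored to the most recent experience, while the slow learner settles near the average of everything it has seen. When the next situation is unknown, that averaged default is the safer guess, which is the point Goldberg makes about the slow-changing right hemisphere.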

In The Master and His Emissary, McGilchrist also suggests that science and mythology would be associated with left and right hemisphere points of view:

Science has to prioritise clarity; detached, narrowly focussed attention; the knowledge of things as built up from parts; sequential analytic logic as the path to knowledge; and the prioritising of detail over the bigger picture. Like philosophy it comes at the world from the left hemisphere’s point of view. (chapter 5)

… by contrast, metaphor and narrative are often required to convey the implicit meanings available to the right hemisphere... (preface)

Addressing the Death of God

Some of the thinkers who have been attempting to address the crisis of modernity (e.g., John Vervaeke, Jordan Peterson, Iain McGilchrist) have, I believe, converged on an extremely similar understanding of this cultural crisis and how to address it. But like the blind men trying to identify the elephant, each has grasped a particular aspect of the problem without necessarily seeing that they are all grasping the same entity. For John Vervaeke, the problem is understood as the meaning crisis and the proposed solution is tied up with relevance realization and cultivating wisdom. For Jordan Peterson in Maps of Meaning, the problem was largely about the conflict between scientific and mythological conceptions of the world with the solution being a scientific and philosophical understanding of the meaning of mythology. For Iain McGilchrist in The Master and His Emissary, the problem was portrayed as a conflict between the modes of attention characterizing the cerebral hemispheres and the solution is to re-incorporate the right hemisphere mode of attention into Western culture. I think that all of these are different manifestations of the same underlying problem. I hope that I have adequately demonstrated the connections between these different ways of thinking about the problem in this post.

To recap those connections: I have drawn on my recent paper with John Vervaeke and Mark Miller to argue that the fundamental difference between the hemispheres can be thought of as a difference in how they realize relevance. If our culture is tilted towards the left hemisphere mode, as Iain McGilchrist argues, this would manifest as a problem in our ability to realize relevance (i.e., to identify what is important and what is not). We will be too focused on narrow details and specialized knowledge at the cost of seeing the big picture. We will privilege efficiency over resiliency. Furthermore, I argued that the differences between science and mythology are analogous to the differences between the hemispheres as described by the Janus model. Science (and the left hemisphere) creates highly precise models that update quickly and can be used to predict and control the world around us. Mythology (and the right hemisphere) consists of a less detailed model that updates slowly and can generalize to many different situations. This means that the central problem of Jordan Peterson’s 1999 book Maps of Meaning, which consisted of reconciling the tension between scientific and mythological understandings of the world, is a manifestation of the same problem that Iain McGilchrist described in The Master and His Emissary. It is the problem of how to reconcile the modes of experience that characterize the right and left hemispheres.

I believe that the problems posed by Vervaeke, Peterson, and McGilchrist are, despite their apparent differences, ultimately the same at bottom (which is not to say that there aren’t any legitimate disagreements between them). They are all describing different aspects of the problem that Nietzsche recognized more than 100 years ago. In what amounts to a guttural scream, Nietzsche attempted to convey to us the magnitude and historical importance of our situation:

The madman jumped into their midst and pierced them with his eyes. “Whither is God?” he cried; “I will tell you. We have killed him — you and I. All of us are his murderers. But how did we do this? How could we drink up the sea? Who gave us the sponge to wipe away the entire horizon? What were we doing when we unchained this earth from its sun? Whither is it moving now? Whither are we moving? Away from all suns? Are we not plunging continually? Backward, sideward, forward, in all directions? Is there still any up or down? Are we not straying as through an infinite nothing? Do we not feel the breath of empty space? Has it not become colder? Is not night continually closing in on us? Do we not need to light lanterns in the morning? Do we hear nothing as yet of the noise of the gravediggers who are burying God? Do we smell nothing as yet of the divine decomposition? Gods, too, decompose. God is dead. God remains dead. And we have killed him.

“How shall we comfort ourselves, the murderers of all murderers? What was holiest and mightiest of all that the world has yet owned has bled to death under our knives: who will wipe this blood off us? What water is there for us to clean ourselves? What festivals of atonement, what sacred games shall we have to invent? Is not the greatness of this deed too great for us? Must we ourselves not become gods simply to appear worthy of it? There has never been a greater deed; and whoever is born after us—for the sake of this deed he will belong to a higher history than all history hitherto.” (The Gay Science, 125)

The loss of our mythological worldview, which was associated with a rich corpus of ritual, music, architecture, and art has left us in a state of cultural confusion. Which way is up? Which way is down? We no longer have any shared sense of who we are, where we are, and towards what aim we ought to devote ourselves. The result is a fragmented worldview and a fragmented culture.

A shared goal, narrative, or value is missing, and because it is missing we have no reason to cooperate and communicate at scale. Evolutionary developmental psychologist Michael Tomasello, in his 2010 book The Origins of Human Communication, argued that communication is fundamentally a cooperative endeavor and that real communication relies on common ground and shared intentionality. As he put it:

The ability to create common conceptual ground— joint attention, shared experience, common cultural knowledge—is an absolutely critical dimension of all human communication…

Human communication is thus a fundamentally cooperative enterprise, operating most naturally and smoothly within the context of (1) mutually assumed common conceptual ground, and (2) mutually assumed cooperative communicative motives…

In general, shared intentionality is what is necessary for engaging in uniquely human forms of collaborative activity in which a plural subject “we” is involved: joint goals, joint intentions, mutual knowledge, shared beliefs—all in the context of various cooperative motives. (pp. 5-7)

Does our culture have any “mutually assumed common conceptual ground”? I can’t see that we do. In that sense, our collective inability to reasonably communicate about important cultural issues is unsurprising. For most of our recent ancestors, that joint “we” was facilitated by a shared conception of God (although it could also involve patriotism, shared ideology, etc.). Within the Christian worldview, “we” were participating in the process of bringing about God’s kingdom on earth. The death of God represents the death of this overarching structure which gave us direction as individuals and facilitated our cooperation as a group. At this point, Western culture is so fragmented that it’s hard to even identify it as “our culture”. It’s more like a multitude of cultures that just so happen to have a vaguely shared history and geographical location. I suspect that this cultural fragmentation is unsustainable in the long run.

But what can we do? I agree with John Vervaeke and colleagues that we cannot simply go back to what we had before, and I wouldn’t want to do that even if we could:

In the West, we are realizing with divisive discomfort that our Judeo-Christian model of meaning, which occupied our teleological awareness for over a millennium, was unprepared for the post-scientific world into which it was ushered. In our contemporary division of the sciences, burgeoning naturalistic accounts supported by a dawning comprehension of the brain, much of our scriptural teleology no longer satisfies us to our core, and our previously personified, cooperative universe can no longer be trusted to hold us in its favour. At no one moment in particular, we as a civilization lost the anchorage by which we could govern and organize a sense of the Absolute. (2017, pp. 45-46)

We cannot go back to a naive teleological worldview, but perhaps these mythological narratives, which supported the great civilizations of the past, have some wisdom we can make use of.

Looking to the past for wisdom in the present

The hero myth is supposed to describe “what to do when you no longer know what to do”. So, what does that actually look like?

In the first volume of his classic text A History of Religious Ideas, Mircea Eliade described the Egyptian myth of Osiris as follows (keep in mind that Horus plays the role of hero in this myth):

Despite certain inconsistencies and contradictions, which can be explained by the tensions and syncretisms that preceded the final victory of Osiris, his central myth can easily be reconstructed. According to all the traditions, he was a legendary king, famous for the energy and justice with which he governed Egypt. Seth, his brother, set a snare for him and succeeded in murdering him. His wife, Isis, a "great magician," manages to become pregnant by the dead Osiris. After burying his body, she takes refuge in the Delta; there, hidden in the papyrus thickets, she gives birth to a son, Horus. Grown up, Horus first makes the gods of the Ennead recognize his rights, then he attacks his uncle.

At first, Seth is able to tear out one of his eyes, but the combat continues, and Horus finally triumphs. He recovers his eye and offers it to Osiris… After his victory, Horus goes down to the land of the dead and announces the good news: recognized as his father's legitimate successor, he is crowned king. It is thus that he "awakens" Osiris; according to the texts, "he sets his soul in motion."

It is especially this last act of the drama that throws light on the mode of being characteristic of Osiris. Horus finds him in a state of unconscious torpor and is able to reanimate him. "Osiris! look! Osiris! listen! Arise! Live again!"… After his coronation — that is, after he has put an end to the period of crisis ("chaos") — Horus reanimates him: "Osiris! thou wert gone, but thou hast returned; thou didst sleep, but thou hast been awakened; thou didst die, but thou livest again!" (pp. 97-98)

This story, like other hero myths, is describing an eternal pattern. Everything that was once powerful and creative eventually becomes old and stale (Osiris). A tradition that was vibrant and meaningful at first will eventually become ossified and outdated. Entropy is inevitable. The forces of tyranny, chaos, or decay (Seth) will eventually come sweeping in to take advantage of that situation. But although threatening, chaos is always pregnant with new potentials and opportunities (Isis). As representatives of this new potential (Horus), our job in these moments is to confront the forces of tyranny and chaos, rescue and resuscitate our blind tradition, and help it to see again by integrating it with a conscious awareness of the present.

The scientific enterprise has undermined the propositions that our mythological worldview relied upon. I believe, however, that the scientific enterprise can also help us to put the pieces back together in order to create something better and more resilient. This does not involve naive attempts at preserving religious propositions, e.g., “creation science” (which is not science). The explicit propositions of Christianity or any other mythological worldview are mostly irrelevant and irreconcilable (it is in this sense that Richard Dawkins has a point). Propositions are relevant to worldviews only insofar as they serve as the scaffolding for communicating a truth that is, at its core, ineffable. The tao that can be told is not the eternal Tao, after all. What’s important is not the propositions, but rather what is implicit in mythological narratives (along with art, ritual, and other forms of fiction). In a letter to his father, a young Jordan Peterson attempted to describe the nature of this problem.

I don't know, Dad, but I think I have discovered something that no one else has any idea about, and I’m not sure I can do it justice. Its scope is so broad that I can see only parts of it clearly at one time, and it is exceedingly difficult to set down comprehensibly in writing. You see, most of the kind of knowledge that I am trying to transmit verbally and logically has always been passed down from one person to another by means of art and music and religion and tradition, and not by rational explanation, and it is like translating from one language to another. It's not just a different language, though — it is an entirely different mode of experience. (MoM, pp. 459-460)

As Iain McGilchrist put it, the left hemisphere tends towards “sequential analytic logic” while “metaphor and narrative are often required to convey the implicit meanings available to the right hemisphere”.

Tom Morgan once described my own work to me as something like ‘the right hemisphere trying to communicate with the left hemisphere in its own language’. Although Jordan Peterson is not discussing his work in the context of hemispheric differences, I think that is exactly what he is trying to convey in the passage above. He is attempting to demonstrate the value of ideas which have been refined over many thousands of years, which are traditionally passed down implicitly through fiction, art, and tradition. He is trying to do this in a way that is explicit, verbal, and logical. In that sense, Maps of Meaning is attempting to convey the value of the right hemisphere’s mode of experience in a way that makes sense to the left hemisphere.

Conclusion

The overlap between hemispheric differences and cultural changes shouldn’t be interpreted too literally. Obviously Western culture doesn’t have a collective brain with right hemisphere damage or something. The overlap between hemispheric differences and cultural changes reflects the fact that certain epistemic tradeoffs occur in all systems that can learn. All systems capable of learning in a complex world will be subject to the tradeoffs associated with relevance realization. Relevance realization occurs within individuals, but it also occurs collectively in cultures through distributed cognition and cultural evolution. Western culture may not be dealing with these tradeoffs appropriately (for whatever reason) and therefore it appears to have taken on some characteristics of the left cerebral hemisphere.

We live in one of the most unpredictable and uncertain periods in history. For most of human history, one could guess that the next 50 years would look pretty much like the last 50 years. Culture and technology remained relatively stable for centuries at a time. But now it is obvious that the world 50 years from now will look very different from the world today, just as the world today looks very different from the world 50 years ago.

During predictable, stable periods, developing the cognitive style of the left hemisphere is important. Precision, control, and explicit planning are most useful during periods of stability. During periods of unpredictability and instability, however, it is necessary to have a different strategy. In that case we need, in Joseph Dien’s words, “a unitary view of the past in order to immediately detect and respond to novel and unexpected events”, which I have argued was once the role of mythology.

The loss of our mythological worldview represents both a crisis and an opportunity. I have previously argued that postmodern nihilism is analogous to the “descent into chaos” or the increase in entropy that precedes an insight or phase change. By allowing us to rethink first principles, cultural nihilism provides an opportunity for a more functional and resilient worldview to emerge. This worldview must, as Jordan Peterson recognized, reconcile the scientific and mythological points of view.

In declaring the death of God, Nietzsche knew that he had come too early. Western intellectual culture was not yet ready for what he had to say. He was a man who, in his own prophetic words, would be “born posthumously”.

Here the madman fell silent and looked again at his listeners; and they, too, were silent and stared at him in astonishment. At last he threw his lantern on the ground, and it broke into pieces and went out. “I have come too early,” he said then; “my time is not yet. This tremendous event is still on its way, still wandering; it has not yet reached the ears of men. Lightning and thunder require time; the light of the stars requires time; deeds, though done, still require time to be seen and heard. This deed is still more distant from them than the most distant stars—and yet they have done it themselves.” (GS 125)

That was 140 years ago. I wonder, has the madman’s time come yet?

If you read this passage from Peterson’s Maps of Meaning through the hemispheric lens it takes on a significance that I think of practically weekly. Perhaps the censor is his right hemisphere, connected to the Tao, myth. And the left the analytical, articulate intellectual, but abstracted and confabulating. I believe that battle still rages on within him, and you can hear it in the two different ways he speaks.

He’s writing about a breakdown in his earlier life:

“Something odd was happening to my ability to converse. I had always enjoyed engaging in arguments, regardless of topic. I regarded them as a sort of game (not that this is in any way unique). Suddenly, however, I couldn’t talk - more accurately, I couldn’t stand listening to myself talk. I started to hear a “voice” inside my head, commenting on my opinions. Every time I said something, it said something - something critical. The voice employed a standard refrain, delivered in a somewhat bored and matter-of-fact tone:

You don’t believe that.

That isn’t true.

You don’t believe that.

That isn’t true.

The “voice” applied such comments to almost every phrase I spoke. I couldn’t understand what to make of this. I knew the source of the commentary was part of me, but this knowledge only increased my confusion. Which part, precisely, was me - the talking part or the criticizing part? If it was the talking part, then what was the criticizing part? If it was the criticizing part - well, then: how could virtually everything I said be untrue? In my ignorance and confusion, I decided to experiment. I tried only to say things that my internal reviewer would pass unchallenged.

This meant that I really had to listen to what I was saying, that I spoke much less often, and that I would frequently stop, midway through a sentence, feel embarrassed, and reformulate my thoughts. I soon noticed that I felt much less agitated and more confident when I only said things that the “voice” did not object to. This came as a definite relief. My experiment had been a success; I was the criticizing part. Nonetheless, it took me a long time to reconcile myself to the idea that almost all my thoughts weren’t real, weren’t true - or, at least, weren’t mine.

All the things I “believed” were things I thought sounded good, admirable, respectable, courageous. They weren’t my things, however - I had stolen them. Most of them I had taken from books. Having “understood” them, abstractly, I presumed I had a right to them - presumed that I could adopt them, as if they were mine: presumed that they were me. My head was stuffed full of the ideas of others; stuffed full of arguments I could not logically refute. I did not know then that an irrefutable argument is not necessarily true, nor that the right to identify with certain ideas had to be earned.”

Brett,

Wonderful synthesis as always. In my own thinking I've been wrestling a lot with the " ... and thus" of these arguments, having spent a year or three in the well of incredible work that the authors you mention (yourself included) have been doing. Certainly John's "After Socrates" which I've been enjoying immensely is a very individual "... and thus." But to me the most interesting set of idea-meets-reality on this topic has been in AI. I would *love* to get your thoughts on how transformer-based LLMs have kind of reverse-engineered a bit of this -- quite literally improving their performance by learning how to tell what's important.

To my deeply ignorant and uneducated perspective (English major here), this seems to be right down the middle of the discussion. Everyone seems to be experiencing first hand the emergent properties of complex systems ... would love any connections your smarty-pants group of followers may be able to surface.